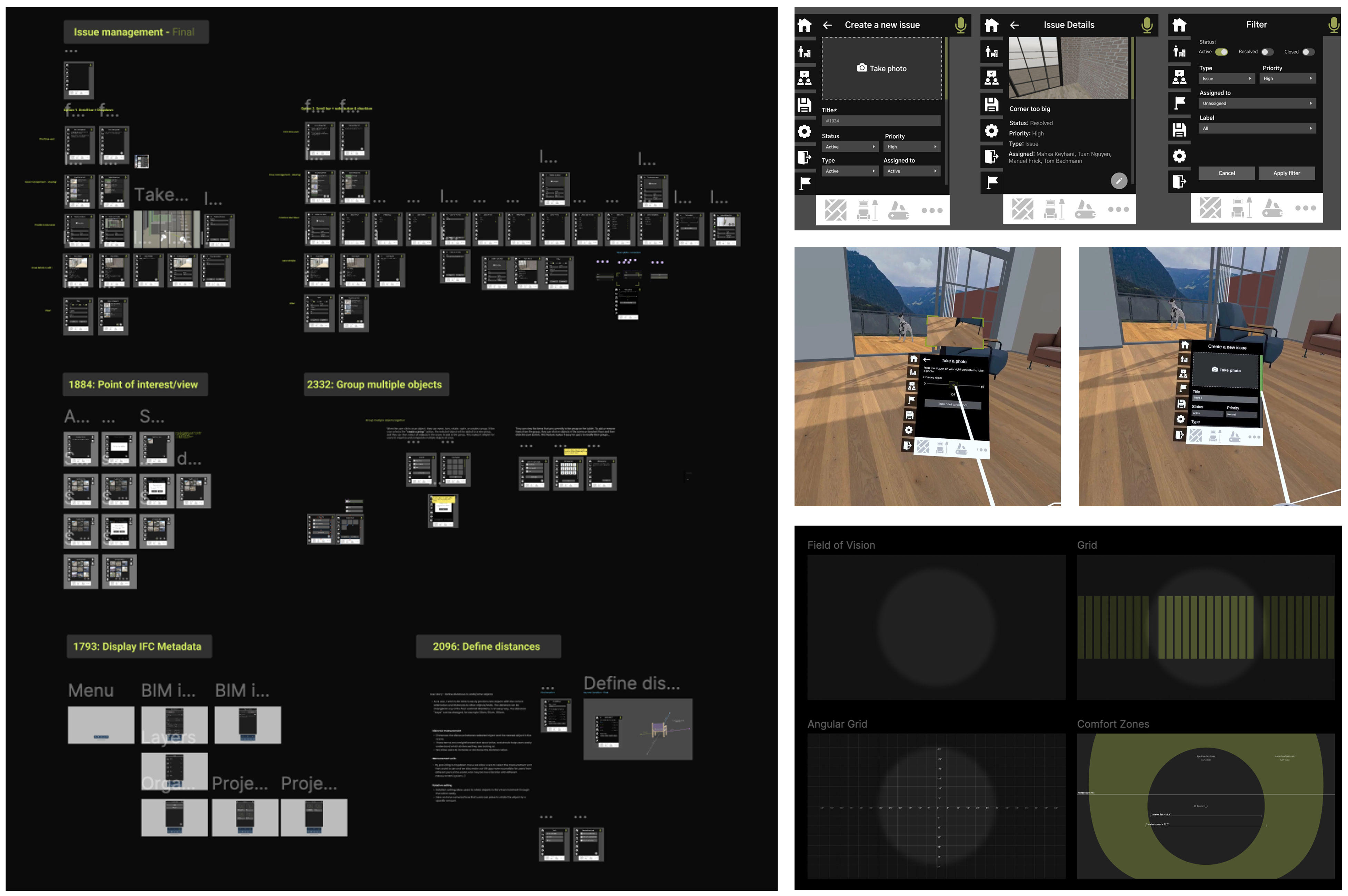

Issue management in VR

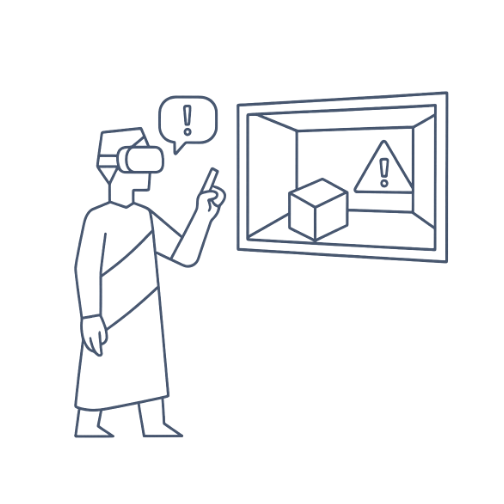

Problem

Teams could explore the BIM model in VR, but as soon as they noticed an issue, they had to take off the headset, open a laptop, and write it down somewhere else. That break in flow meant:

• Context was lost—screenshots and coordinates weren’t tied to the comment.

• Notes lived in emails, PDFs, or sticky notes, so issues slipped through the cracks.

• Decisions stalled because designers, engineers, and site crews never saw the same information at the same time.

In short, the lack of an in-headset issue tracker turned a powerful VR review into a slow, error-prone feedback loop.

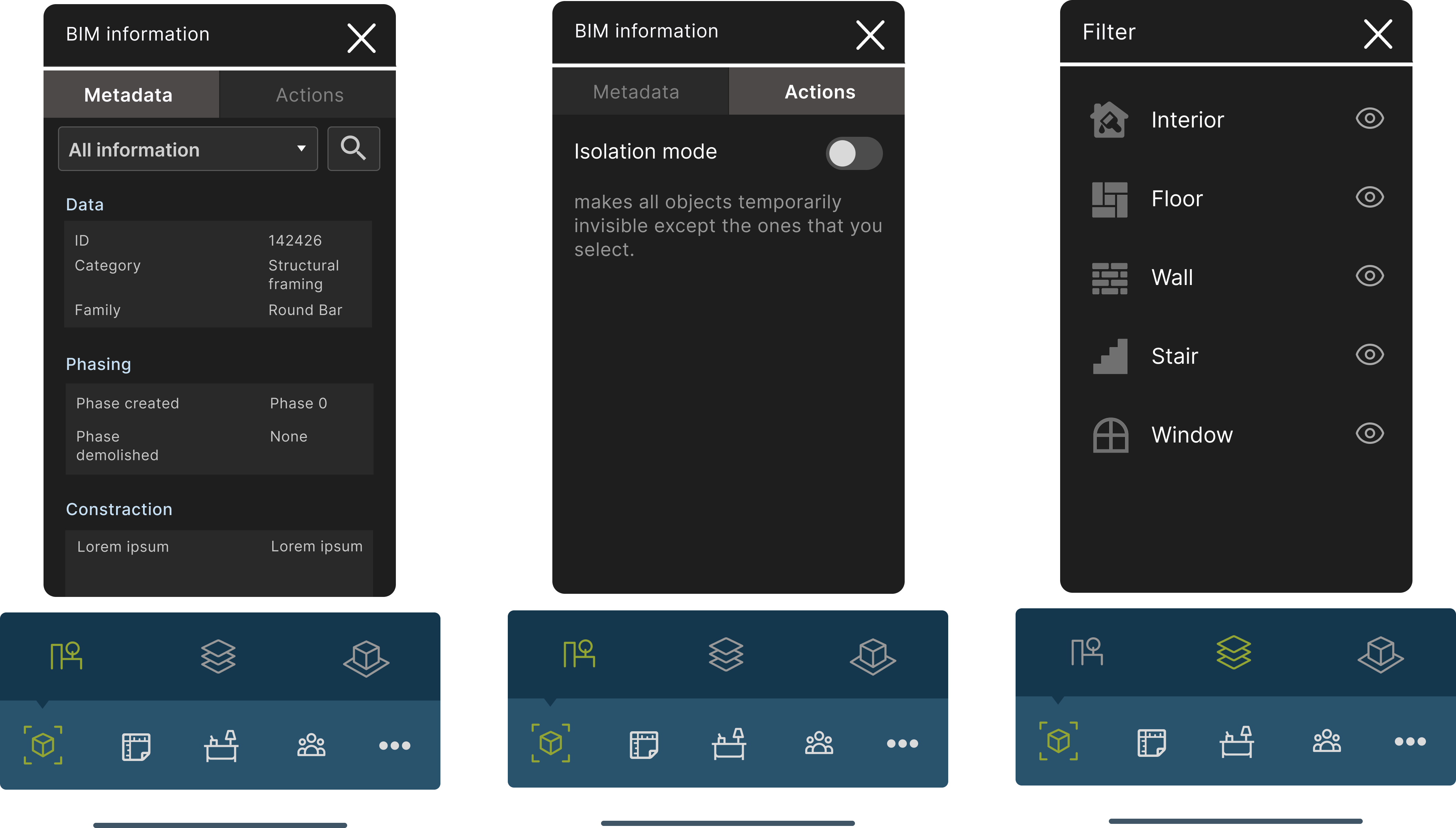

Goals

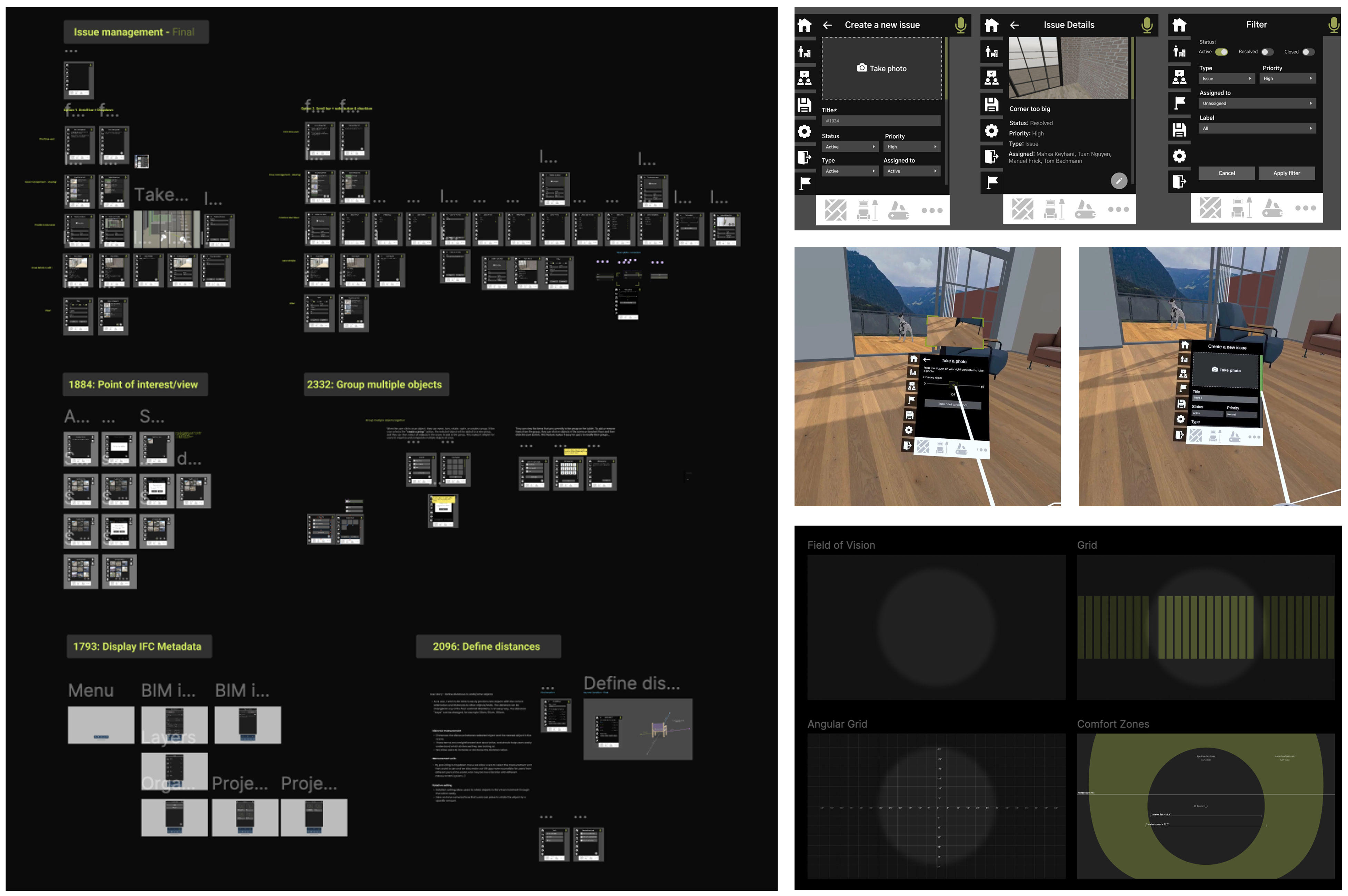

Allow anyone inside the VR model to flag, describe, and share an issue in under 60 seconds, using just one controller. The flow includes all key BIMcollab fields (title, status, priority, assignee, labels) and a familiar tablet-style menu, so feedback stays in context and flows straight into the coordination workflow.

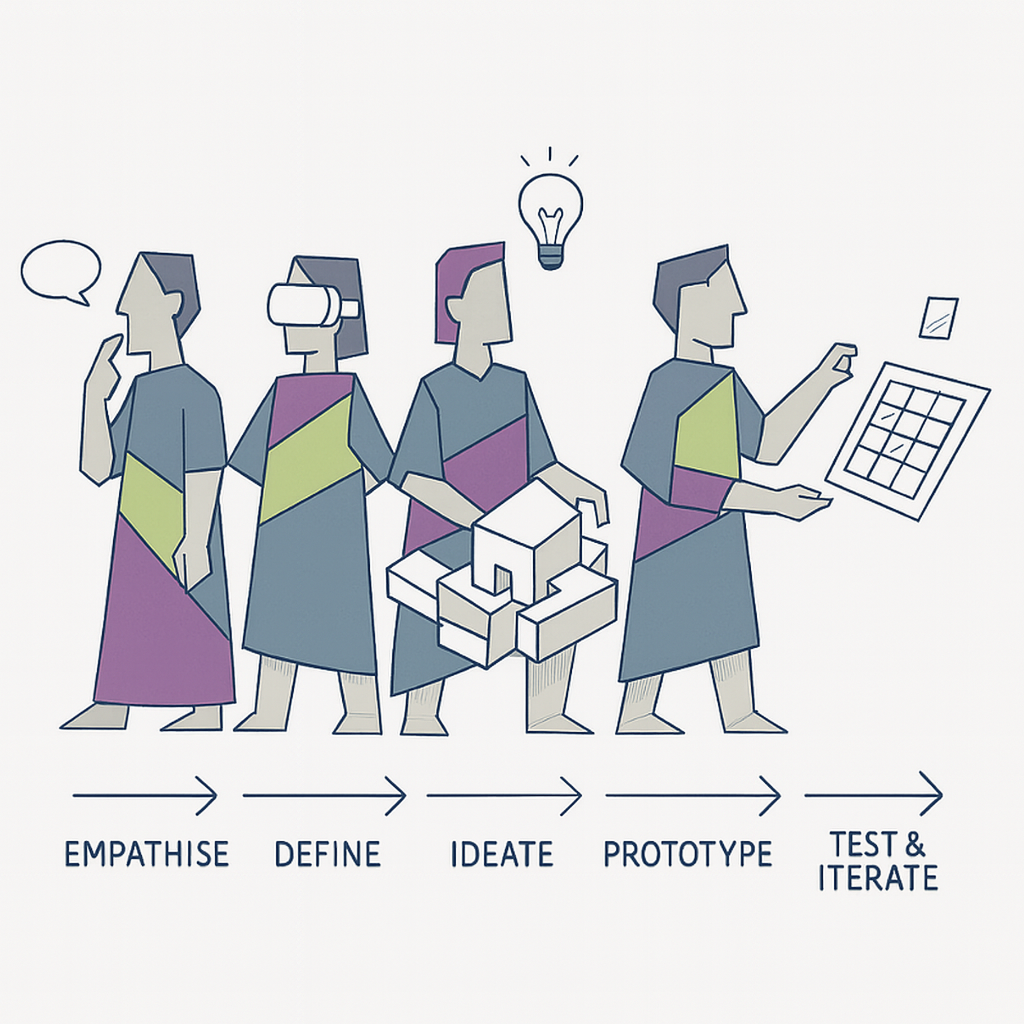

Design Process & Approach

- User research – Interviewed architects, real estate developers, and site managers to map their “find it, note it, fix it” workflow and uncovered the biggest pain point: breaking immersion to report issues on a laptop.

- Competitive analysis – Reviewed VR/AR and BIM tools (Revizto, Navisworks, Resolve, etc.) to see how they handle issue tracking, and where they fall short in VR.

- Rapid prototyping – Sketched flows in FigJam, built hi-fi prototypes in Figma, then teamed up with engineering to check controller reach, text readability, and photo-capture timing on an internal in-headset build.

- Usability testing – Ran two rounds with 10 mixed-role users. Iterated on button sizing and field defaults, and added a voice-note shortcut for gloved users.

- Dev handoff & QA – Delivered prefab specs and guidelines for Unity devs, and verified headset UI–BIMcollab sync in staging.

Impact

- 40-second capture – Logging an issue now takes ~40 seconds instead of a laptop detour lasting a couple of minutes.

- Higher first-time success – Participants were able to create and submit a ticket on their first attempt.

- Faster triage in the CMS – Status, priority, and assignee map to BIMcollab, so reviewers filter and hand off work more quickly.

- Report issues without breaking immersion – Teams can log an issue end to end in the headset, with no laptop detour.

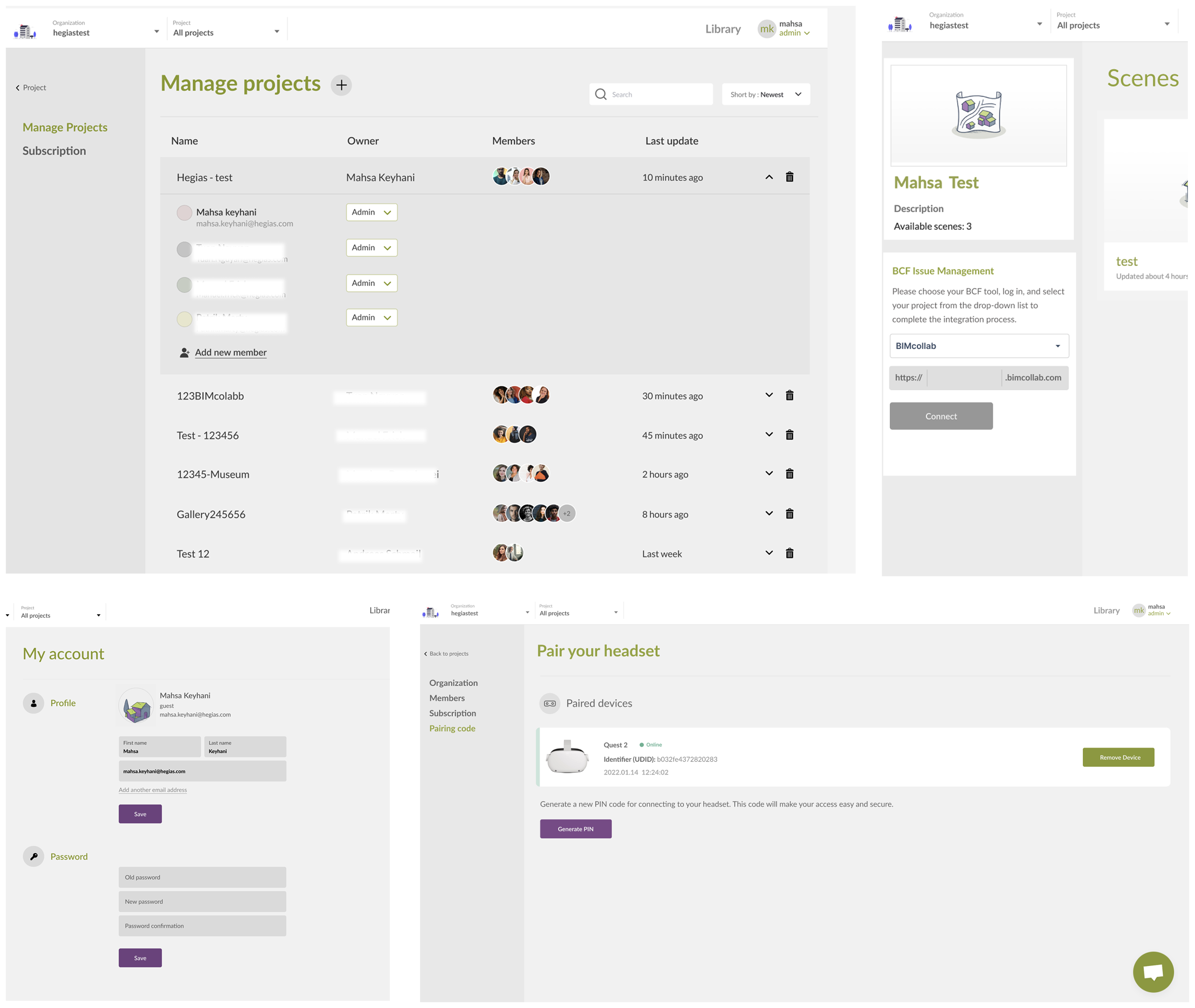

Other Projects

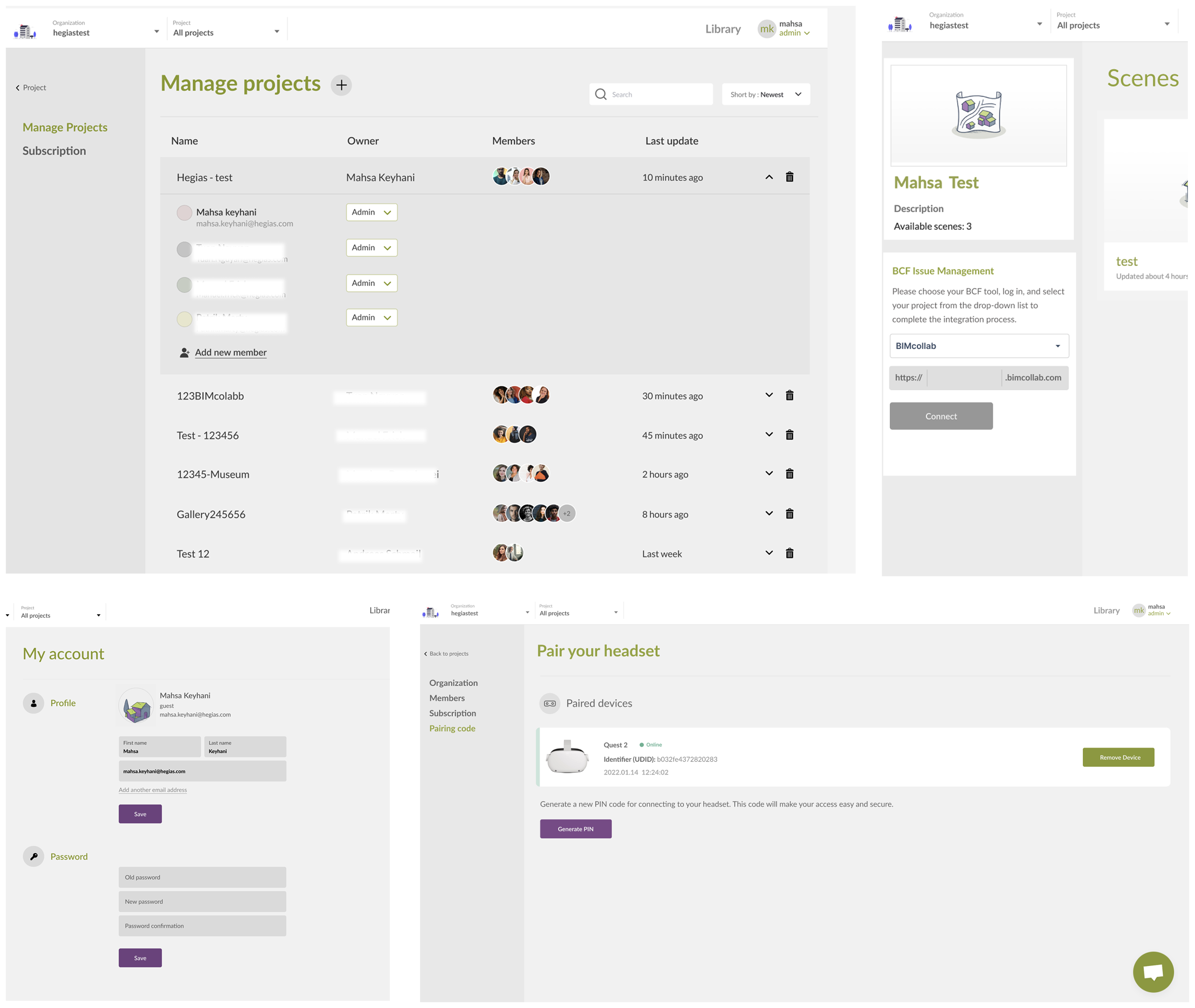

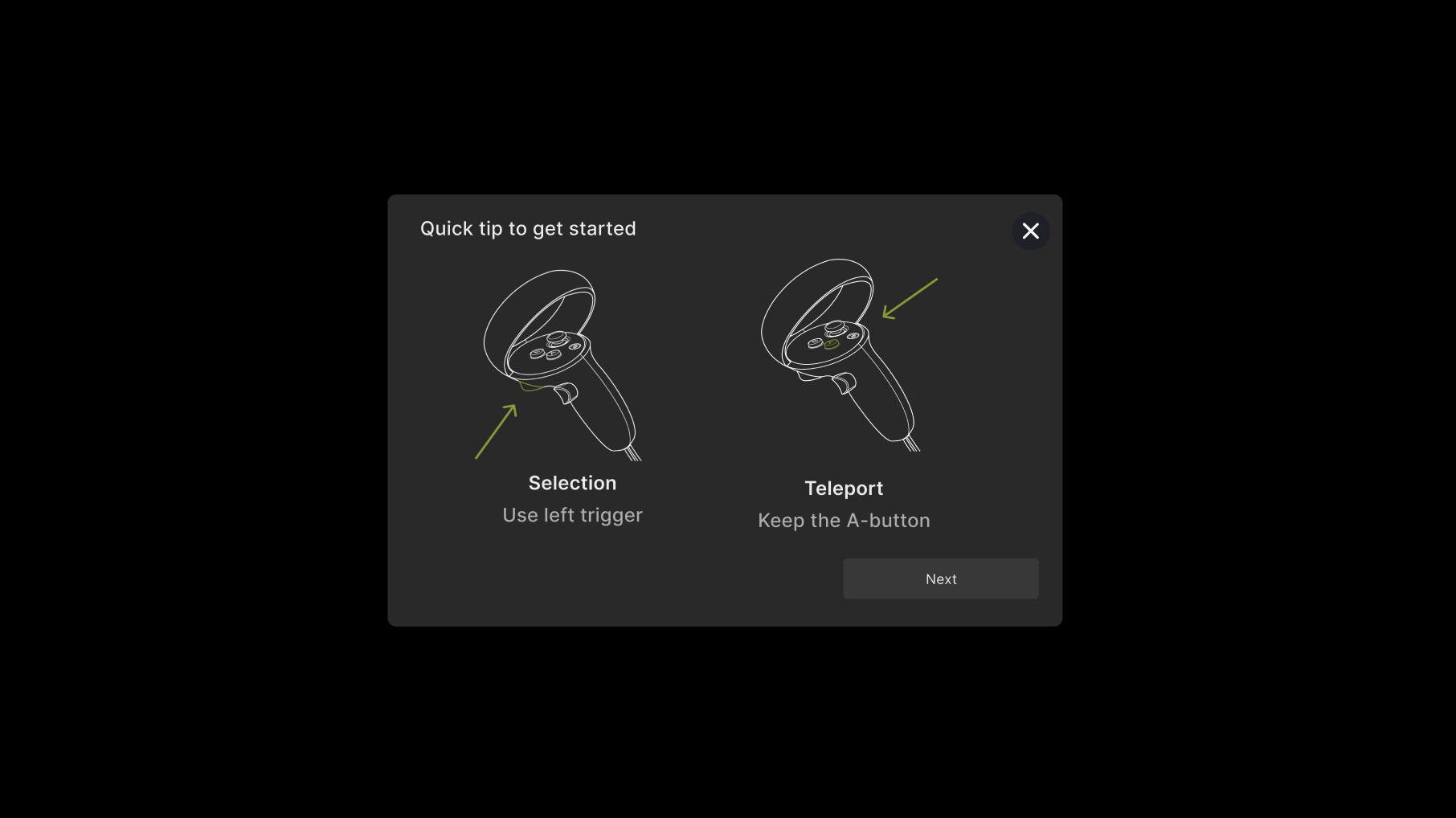

As part of the HEGIAS CMS redesign, I worked on key flows that connect VR projects to BIMcollab—including device pairing, user management, and account settings. I also designed an in-VR tutorial to help users get started smoothly. My focus was on clarity, consistency, and turning complex actions into intuitive experiences for both admins and guests.

Client project: Kirchner Museum

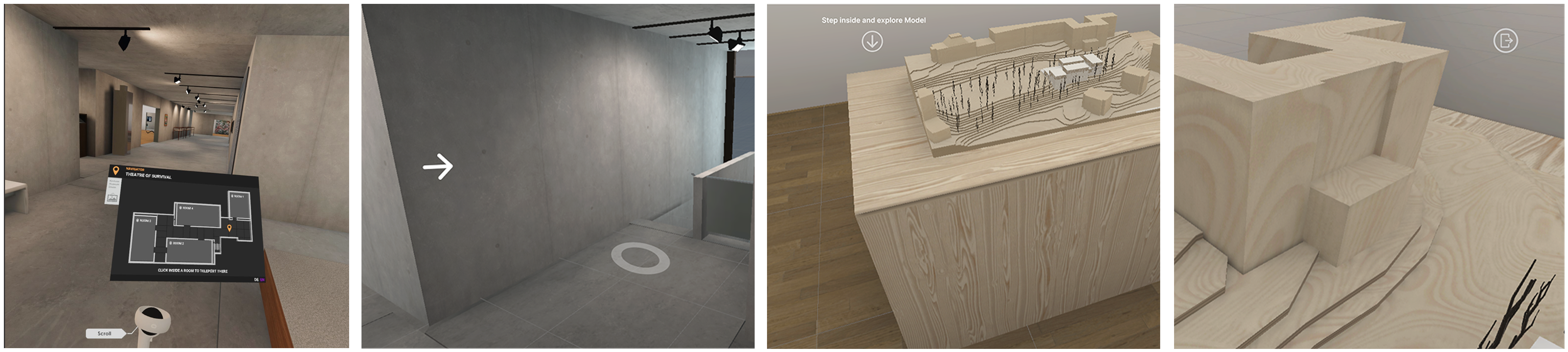

Problem

Visitors enjoyed the life-size VR gallery, but many got disoriented or missed artworks—and some felt “stuck” inside the model.

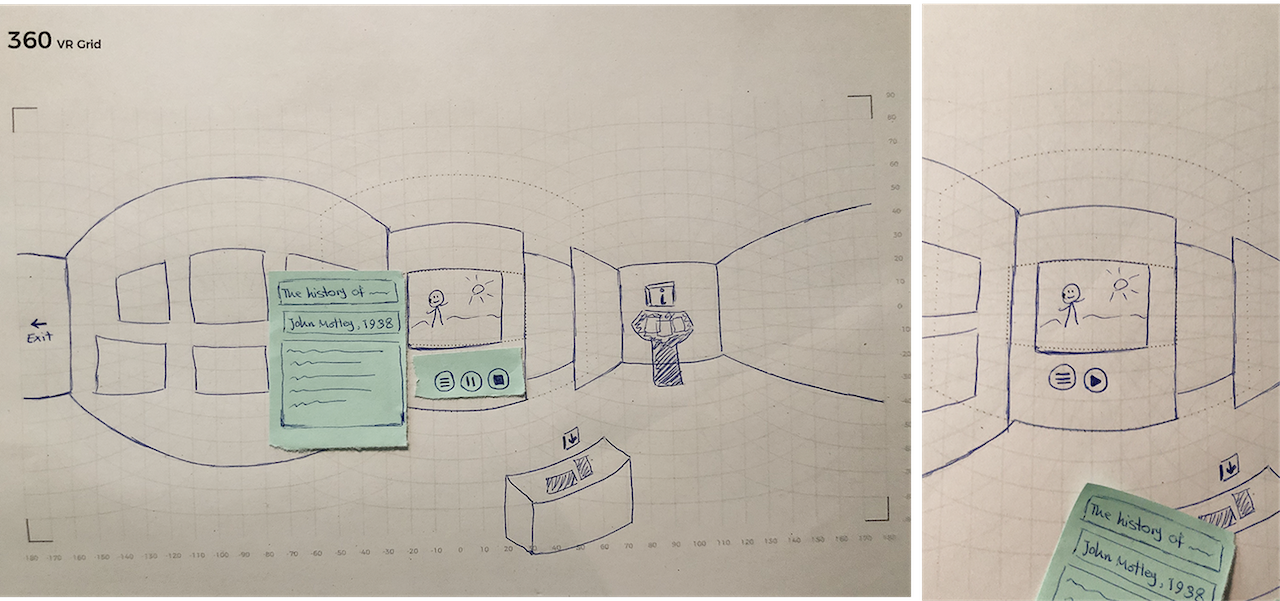

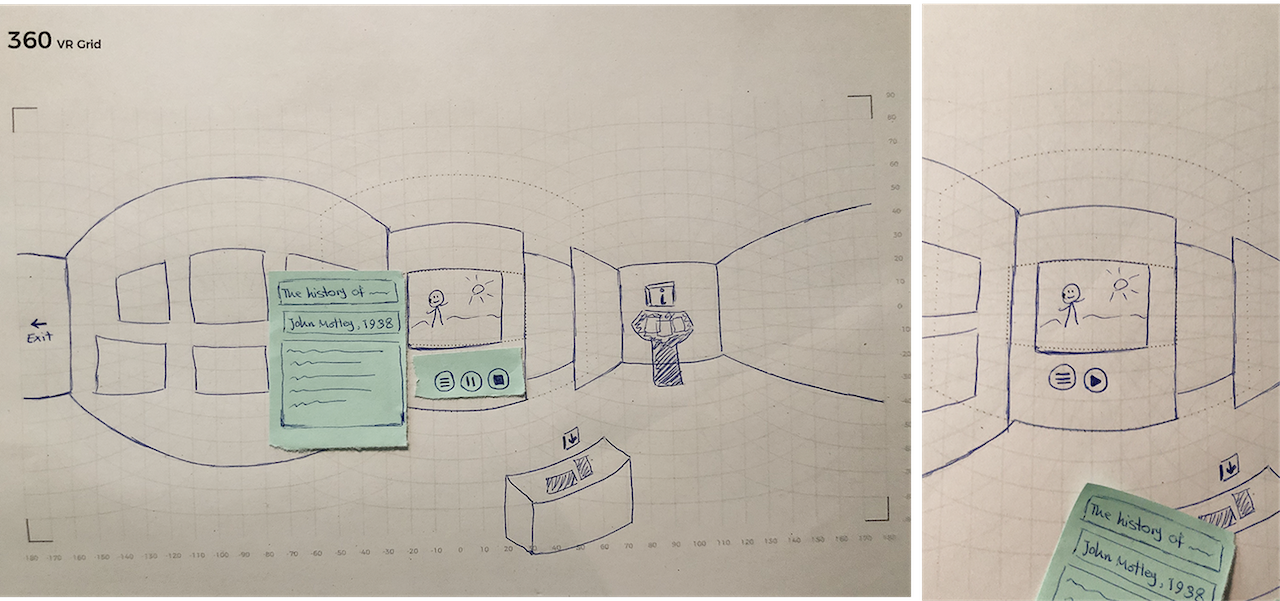

From doodle to headset ✏️ → 🥽

Early storyboard sketches on a 360° VR grid helped me map sightlines and UI placement before I even opened Figma. The final navigation cues you see in the headset still follow those original pencil lines.

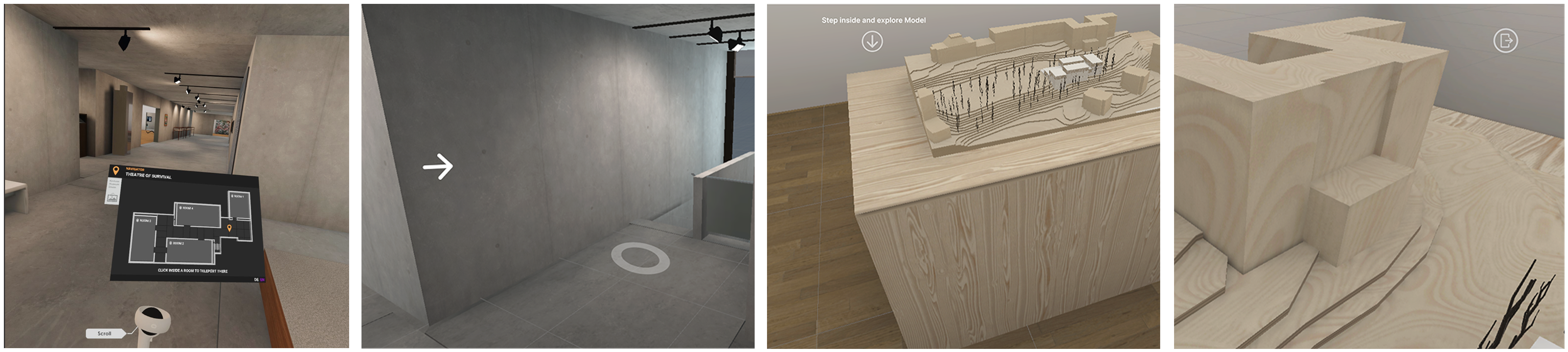

Key features I designed

- Direction arrows and a subtle floor reticle to show the next waypoint.

- An interactive scale-model: point at any room → teleport there.

- A floating “Exit” icon so nobody wondered how to leave.

Check out the live version →

Client project: Richner

Problem

Richner’s showrooms have limited floor space, so customers struggle to picture all the possible layouts, finishes, and room sizes for a new bathroom. Even in VR, early prototypes hid the controls in sub-menus, so shoppers kept asking staff, “How do I change the vanity?”

Goal

Give customers a quick, self-serve way to tweak room size, style, lighting, and objects, whether they’re wearing a headset in the store or clicking through the web demo, so they can make decisions on the spot. 💫

Key features I designed

- Unified bottom toolbar: six clear tabs (Room Size, View, Style, Day-time, Objects, Home) that behave the same in VR and on the web.

- Live mini preview: a picture-in-picture window on the web app that mirrors the headset’s camera, so consultants can see exactly what the VR user sees and coach them.

- One-tap room presets: Small / Medium / Large instantly resize the space and re-flow fixtures.

- Subtle hover / gaze states: first-time users immediately know what they can interact with.

Validation · Usability Testing

We conducted a think-aloud session at the Hegias office, where the VR headset view was mirrored to a laptop and wall screens, allowing the team to observe user interactions and gather live feedback.

What we focused on:

- Can first-time users find the toolbar and change room size without instructions?

- Do web viewers understand what the person in VR is doing?

- Are the hover and gaze states clear enough to show what’s interactive?

Impact

- Shoppers and remote viewers could make decisions together—no need to pass around the headset.

- Sales teams closed deals faster, as clients could see changes in true scale while friends or partners reviewed them online.

- The live preview feature became Richner’s go-to demo tool and is now standard in all new LAB showrooms.

Check out the live version →

My first day at Hegias 🌿💻✨